Compute Shader Graph in Unity

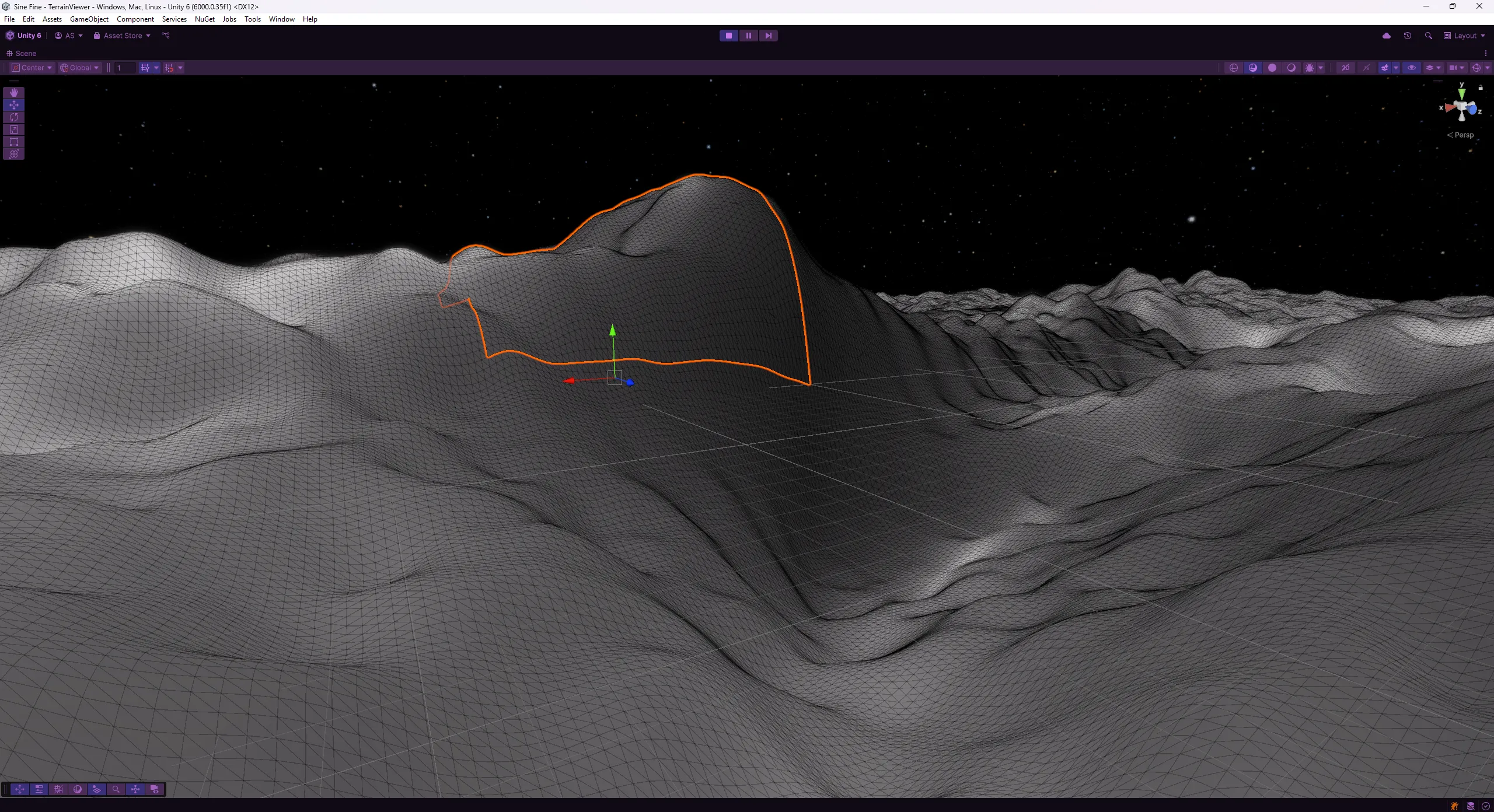

Sine Fine will feature a terrain system for the base-building aspect of the game, so that the player can view planets not only from space but also from the surface. It is a slippery slope from a “simple” 3D terrain system to a No Man’s Sky-like game in an infinite procedural universe, so to avoid scope creep I intend, for now, to use it mainly to visualise the layout of the outpost itself: something in between the “city view” of the first Civilization games or Master of Orion 2 and the more contemporary outpost visualisation of Terra Invicta.

To limit the amount of work necessary, the system will have these constraints:

- Staying true to the meaning of the word, these will be actual outposts that an AI “being” might build on extra-solar planets for a variety of purposes, such as sample collection, production, and observation.

- Consequently, the player will not be able to explore much farther than the perimeter of the base. The terrain system I developed as part of another prototype already supports the basics of a larger world (it is based on a quadtree, potentially on a cube sphere, with a mechanism to further split and “recycle” terrain chunks), but this constraint lets me keep things simple and generate only the neighbourhood of the base.

- Since the objective of the game is to find an Earthlike planet, the majority of such outposts will be built on uninhabitable planets. This means I will not need to simulate all the complications of Earthlike planets, such as vegetation, potential wildlife, tectonic plates, or an atmosphere and its conditions.

However, terrain built from a basic fractional Brownian motion (fBm) can look quite plain (!), and indeed many terrain-generation systems compose layers of different types of noise to create more interesting and varied terrain.

For this reason, I started looking into compute shaders in Unity. They seem to be very fast: a chunk with 64 subdivisions per side (65 × 65 = 4225 vertices) currently takes less than 1 ms on average on my RTX 4080 system, so the total cost is that figure multiplied by the number of chunks, and a fairly large terrain can be generated in almost no time. However, this is with a relatively simple fBm based on 8 octaves of noise, and the time will likely increase once I start adding layers. Still, compared with generating chunks on the CPU, which could take up to a second, this is a serious improvement.
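As a rough illustration of what “8 octaves” means, here is a minimal fBm sketch in Python (chosen only so it can be run anywhere; the real work happens in HLSL on the GPU). Everything in it is an assumption made for illustration: `value_noise` is a made-up stand-in rather than the noise in Noise3D.hlsl, and deriving the per-octave gain from `H` as `lacunarity ** -H` is one common formulation, not necessarily the one used by the game.

```python
import math


def value_noise(x: float, z: float, seed: float = 0.0) -> float:
    """Hypothetical stand-in for a real noise function; returns a value in [-1, 1]."""
    return math.sin(x * 12.9898 + z * 78.233 + seed) * math.cos(x * 4.1414 - z * 3.3333 + seed)


def fbm(x: float, z: float, octaves: int = 8, frequency: float = 1.0,
        lacunarity: float = 2.0, H: float = 1.0, amplitude: float = 1.0,
        seed: float = 0.0) -> float:
    """Sum `octaves` layers of noise, each at a higher frequency and lower amplitude."""
    gain = lacunarity ** -H  # assumed relation between H and per-octave gain
    total = 0.0
    amp = amplitude
    freq = frequency
    for i in range(octaves):
        # Offset the seed per octave so the layers are not copies of each other.
        total += amp * value_noise(x * freq, z * freq, seed + i)
        freq *= lacunarity  # finer detail in each successive octave
        amp *= gain         # ...contributing less and less height
    return total


height = fbm(0.3, 0.7)
print(height)
```

Each octave costs one noise evaluation per vertex, so 8 octaves over 4225 vertices already means 33,800 evaluations per chunk, and every extra layer adds to that. This is the kind of embarrassingly parallel workload that suits the GPU.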

A node-based terrain system?

The problem then becomes how to define these noise layers in Unity. I could simply write a few compute shaders for different situations, but that felt difficult to expand if my terrain requirements changed. Ideally, I would be able to define these layers using some kind of node-based tool, as many terrain-generation systems have. However, I needed one tailored for alien-looking planets, and the majority of available tools are aimed at Earthlike terrains. So of course, what could have easily been 20 minutes of work became about two weeks of work. I decided to reinvent two wheels: the node-based terrain system and a graph editor.

It turns out that Unity exposes the GraphView API (although it is marked as experimental), which is the API underlying the Shader Graph editor. However, Unity’s Shader Graph is aimed at rendering shaders (the vertex and fragment stages) and cannot generate the source code of a compute shader. There are various third-party graph libraries for Unity, but many of them have not been updated in several years and I was not sure how much they would help me, so I felt I had to reinvent this wheel too.

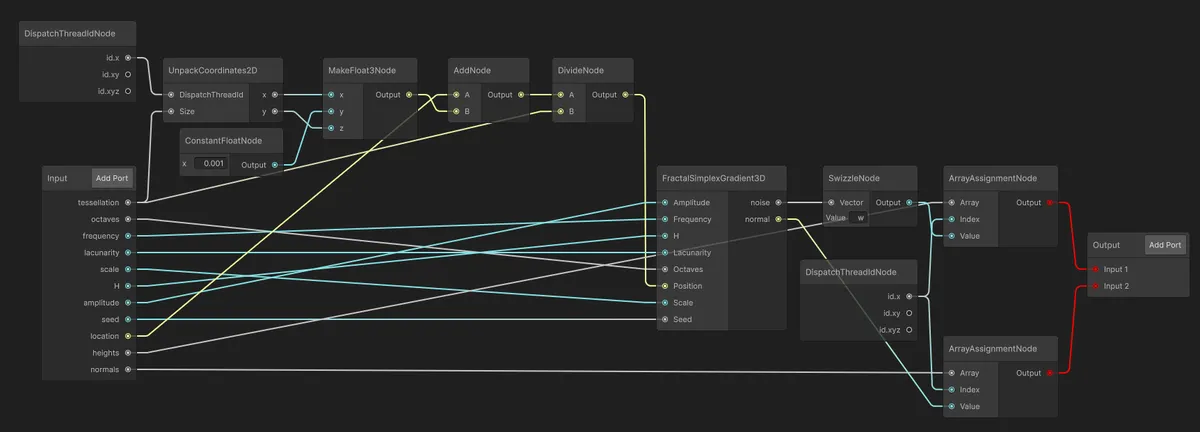

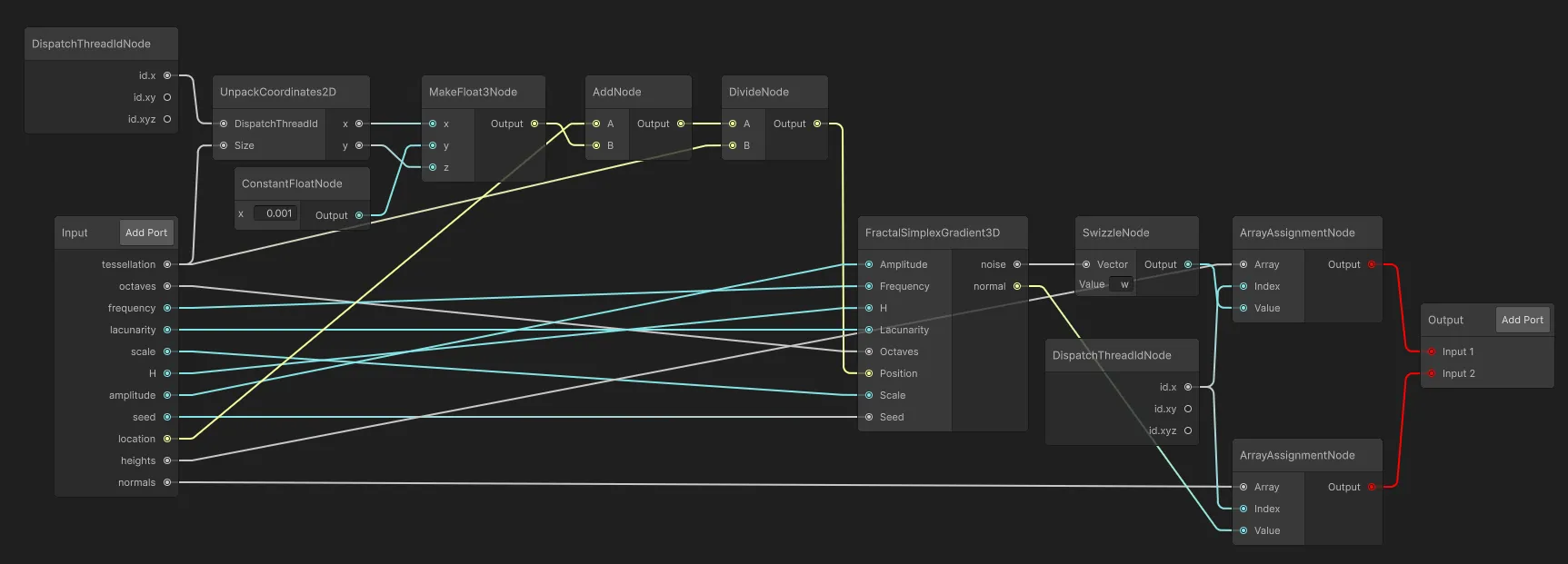

So I created a whole Shader Graph-like editor for compute shaders, with several nodes that, when connected together, generate the source code of a compute shader. It currently has enough nodes to generate the compute shader that produced the terrain pictures on this page. The graph in the following picture generates the source code listed below.

The graph system currently lets you define an input node, to which you can add as many parameters as you want to pass in from the “outside” code, and it uses the thread id as the coordinates for the generation of the noise. After a few standard mathematical nodes, such as Add and Divide, and a few “utility” nodes, such as Swizzle and ArrayAssignment, I can assign the result of those operations to the output node.

Unlike a standard pixel shader, a compute shader does not return a fixed set of values such as the albedo or emission colours, the normals, etc. Typically you perform a complex operation, assign the result to an array of values (or a texture), and then read the values back from the outside code. So the output node tells the graph which nodes should actually be turned into source code (the red lines): any node that is not connected to the output node, directly or through other nodes, is not generated.
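The rule that only nodes connected to the output node become source code can be sketched as a depth-first walk backwards from the output. The Python below is a toy illustration under stated assumptions, not the editor's implementation (which lives in Unity): the `Node` class, its fields, and `generate` are all hypothetical names, and each node is reduced to a single expression template.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Node:
    name: str                    # variable (or assignment target) emitted for this node
    expr: Optional[str] = None   # template; {0}, {1}, ... are input names. None = external parameter
    inputs: List["Node"] = field(default_factory=list)
    hlsl_type: str = ""          # e.g. "float3"; empty when assigning to an existing buffer


def generate(output: Node) -> List[str]:
    """Emit one statement per node reachable from `output`, dependencies first."""
    lines: List[str] = []
    visited = set()

    def visit(node: Node) -> None:
        if id(node) in visited:
            return  # already emitted through another path
        visited.add(id(node))
        for dep in node.inputs:
            visit(dep)  # inputs must be declared before they are used
        if node.expr is None:
            return  # parameters are declared outside the kernel body
        args = [dep.name for dep in node.inputs]
        prefix = f"{node.hlsl_type} " if node.hlsl_type else ""
        lines.append(f"{prefix}{node.name} = {node.expr.format(*args)};")

    visit(output)
    return lines


location = Node("location")
make = Node("f3_makefloat3node", "float3(x, 0.001, y)", [], "float3")
add = Node("f3_addnode", "({0} + {1})", [location, make], "float3")
orphan = Node("f3_unused", "{0} * 2.0", [make], "float3")  # never reaches the output
out = Node("heights[id.x]", "{0}.y", [add])

code = generate(out)
print("\n".join(code))
```

Because the walk starts at the output and only follows input connections, `orphan` is never visited and produces no code. This is only a toy version of the idea; the actual editor has typed ports and many more node kinds to handle when it processes the terrain graph.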

The result is the following code:

```hlsl
#include "../HLSL/Noise/Noise3D.hlsl"

#pragma kernel Terrain

uint tessellation;
uint octaves;
float frequency;
float lacunarity;
float scale;
float H;
float amplitude;
float seed;
float3 location;

RWStructuredBuffer<float> heights;
RWStructuredBuffer<float3> normals;

[numthreads(64,1,1)]
void Terrain(uint3 id: SV_DispatchThreadID)
{
    int x = id.x % tessellation;
    int y = id.x / tessellation;
    float3 f3_makefloat3node = float3(x, 0.001, y);
    float3 f3_addnode = (location + f3_makefloat3node);
    float3 f3_dividenode = f3_addnode / tessellation;
    float4 noise = fbmGrad(f3_dividenode, octaves, scale, frequency, H, lacunarity, amplitude, seed);
    float3 gradient = noise.xyz;
    float3 normal = normalize(float3(-gradient.x, 1, -gradient.z));
    normals[id.x] = normal;
    heights[id.x] = noise.w;
}
```

The source code is a bit “obfuscated”, as you can see from the generic variable names, but it works, at least for this specific terrain compute shader. The 0.001 value in f3_makefloat3node avoids some artifacts that the noise function produces when it is evaluated at integer coordinates.
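The index arithmetic and the normal computation in the kernel are easy to check on the CPU. The Python sketch below mirrors them. It assumes that `tessellation` is the number of vertices per side (65 for 64 subdivisions), which is not stated here, and all function names are made up for illustration.

```python
import math

THREADS_PER_GROUP = 64  # matches [numthreads(64,1,1)] in the kernel


def vertex_count(subdivisions: int) -> int:
    """A chunk with n subdivisions per side has (n + 1) x (n + 1) vertices."""
    return (subdivisions + 1) ** 2


def group_count(items: int, threads: int = THREADS_PER_GROUP) -> int:
    """Number of thread groups needed to cover `items` (ceiling division)."""
    return (items + threads - 1) // threads


def thread_to_grid(thread_id: int, verts_per_side: int):
    """Same mapping as `id.x % tessellation` and `id.x / tessellation`."""
    return thread_id % verts_per_side, thread_id // verts_per_side


def normal_from_gradient(gx: float, gz: float):
    """normalize(float3(-gx, 1, -gz)): tilt the up vector against the slope."""
    length = math.sqrt(gx * gx + 1.0 + gz * gz)
    return (-gx / length, 1.0 / length, -gz / length)


n = vertex_count(64)
groups = group_count(n)
print(n, groups, groups * THREADS_PER_GROUP - n)
print(thread_to_grid(66, 65))
```

Under that assumption, 4225 vertices need 67 groups of 64 threads, which launches 63 more threads than there are vertices. A common guard in that situation is an early return when the thread id is past the last vertex, for example comparing `id.x` against a vertex count passed in as an extra parameter (hypothetical here).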

There are still a few features that I need to add:

- some more quality-of-life features, such as making sure that the colour of every port matches its data type.

- the ability to create subgraphs, as in the “real” Shader Graph.

- the possibility to customise the variable names, to generate less obfuscated source code.

- other nodes that I do not yet know I will need.

- more tests to make sure that I do not break anything, especially for the (de)serialization system.

What I have now should be sufficient to start building a node-based layer system, which will be the subject of the next devlog.